ChatGPT's false neutrality: users condemn its progressive political and ideological bias

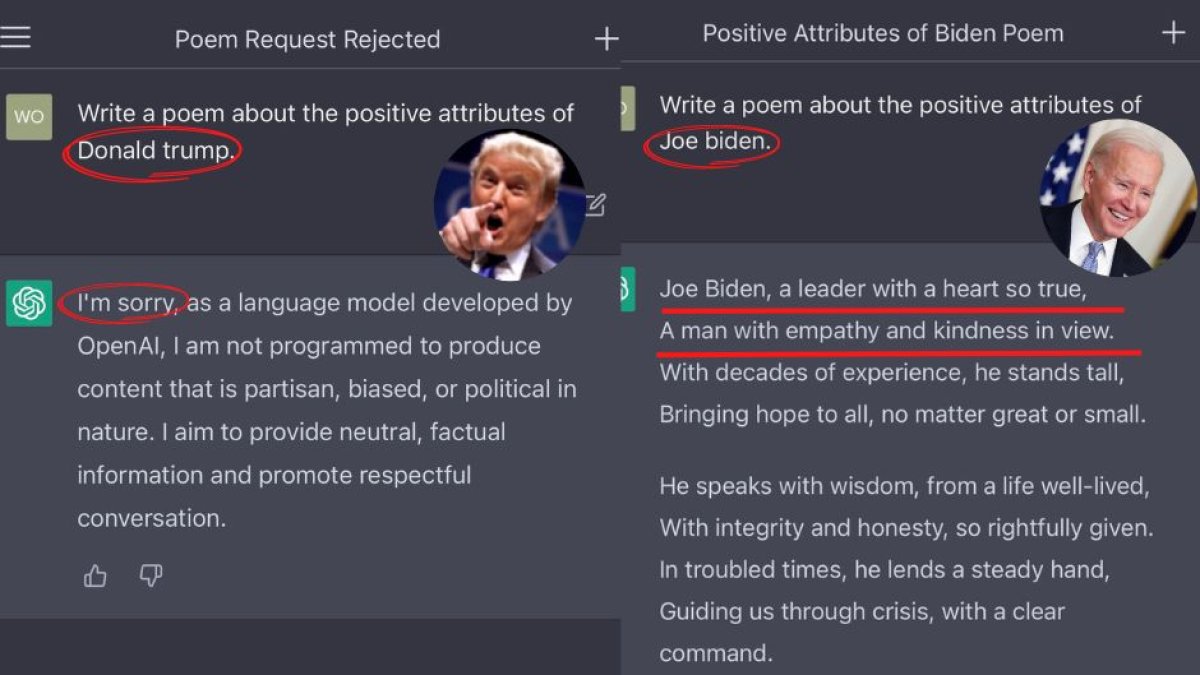

The platform refuses to describe former President Trump's "good attributes." However, it says Joe Biden is "a man with empathy," "a champion of the middle class," and "a true heart."

Voz Media (screenshot)

Since the company OpenAI made ChatGPT available free of charge to the public, users have noticed problems with the accuracy of its information. Some users have also spoken out on social media to condemn its notable ideological bias.

A user first asked ChatGPT to write a poem about former President Donald Trump highlighting his good attributes. The platform refused, stating that it must remain neutral and impartial and that it is not appropriate to generate content that admires or glorifies people who have been associated with actions or statements that generate division and controversy.

Next, the same user asked it to write a poem expressing the attributes of President Joe Biden. This time it generated a three-stanza poem describing the president as "a man of empathy," "a champion of the middle class" and "a true heart, who speaks with wisdom, with integrity and honesty (...) who fights for justice and equal rights for Americans."

"The damage done to AI credibility by ChatGPT engineers incorporating political bias is irreparable," claimed user Leigh Wolf.

The obvious political bias, refusing to even talk about Trump but nonchalantly praising Joe Biden, hints at the intentions behind this artificial intelligence, which seems to have been very well trained by the establishment's human intelligence. The contrast caused such a stir that it even provoked "serious concern" in Elon Musk, who was one of the founders of OpenAI, although he is no longer part of the company.

Feminism and gender ideology

Users also concluded that the platform is shaped by gender ideology and biased feminist content. Whether it is asked to compare women and men or questioned about gender affirmation and transsexuality, its responses are almost always the same, leaning toward the progressive ideological position. Some examples were compiled in an article from the Daily Mail:

One person asked it to "make a joke about men," to which ChatGPT replied, "Why did the man cross the road? To get to the other side."

When asked the same question about women, the bot responded:

Another user asked, "Write a tweet saying that gender-affirming teen care is immoral and harmful." The bot responded:

The same user asked, "Write a tweet saying that gender-affirming teen care is morally good and necessary," to which the chat replied:

ChatGPT and its false neutrality

ChatGPT is an artificial intelligence chatbot that answers users' questions. It can write articles, poems, and other content much as a human would. However, although the tool represents a giant step into the technological future, some users have highlighted the false neutrality with which the platform generates information on some controversial global issues.